
Hi all,

This week my HDFS ran out of space, so I changed the HDFS location from /var/Hadoop to my new partition /Hadoop/Hadoop/…..

I copied the data over, and all services were running fine again.

Except HBase, which no longer starts.

When I start HBase I get no errors, but after 5 seconds the ‘HBase master’ is replaced by ‘Standby HBase master’, and 10 seconds later the ‘HBase master’ goes offline.

How can I solve this?

I get the following errors in my log file:

```
2014-06-06 16:03:03,740 INFO [RpcServer.listener,port=60000] ipc.RpcServer: RpcServer.listener,port=60000: stopping
2014-06-06 16:03:03,743 INFO [master:server01:60000] master.HMaster: Stopping infoServer
2014-06-06 16:03:03,743 INFO [master:server01:60000.archivedHFileCleaner] cleaner.HFileCleaner: master:server01:60000.archivedHFileCleaner exiting
2014-06-06 16:03:03,744 INFO [RpcServer.responder] ipc.RpcServer: RpcServer.responder: stopped
2014-06-06 16:03:03,745 INFO [master:server01:60000.oldLogCleaner] cleaner.LogCleaner: master:server01:60000.oldLogCleaner exiting
2014-06-06 16:03:03,745 INFO [RpcServer.responder] ipc.RpcServer: RpcServer.responder: stopping
2014-06-06 16:03:03,745 INFO [master:server01:60000.oldLogCleaner] master.ReplicationLogCleaner: Stopping replicationLogCleaner-0x1467147ae200012, quorum=server03.rdo01.local:2181,server01.rdo01.local:2181,server02.rdo01.local:2181, baseZNode=/hbase-unsecure
2014-06-06 16:03:03,746 INFO [master:server01:60000] mortbay.log: Stopped SelectChannelConnector@0.0.0.0:60010
2014-06-06 16:03:03,748 INFO [master:server01:60000.oldLogCleaner] zookeeper.ZooKeeper: Session: 0x1467147ae200012 closed
2014-06-06 16:03:03,748 INFO [master:server01:60000-EventThread] zookeeper.ClientCnxn: EventThread shut down
2014-06-06 16:03:03,854 DEBUG [master:server01:60000] catalog.CatalogTracker: Stopping catalog tracker org.apache.hadoop.hbase.catalog.CatalogTracker@23d72e0d
2014-06-06 16:03:03,854 INFO [master:server01:60000] client.ConnectionManager$HConnectionImplementation: Closing zookeeper sessionid=0x1467147ae200011
2014-06-06 16:03:03,856 INFO [master:server01:60000] zookeeper.ZooKeeper: Session: 0x1467147ae200011 closed
2014-06-06 16:03:03,856 INFO [master:server01:60000-EventThread] zookeeper.ClientCnxn: EventThread shut down
2014-06-06 16:03:03,856 INFO [server01.rdo01.local,60000,1402063374987.splitLogManagerTimeoutMonitor] master.SplitLogManager$TimeoutMonitor: server01.rdo01.local,60000,1402063374987.splitLogManagerTimeoutMonitor exiting
2014-06-06 16:03:03,858 INFO [master:server01:60000] zookeeper.ZooKeeper: Session: 0x346714989710014 closed
2014-06-06 16:03:03,858 INFO [main-EventThread] zookeeper.ClientCnxn: EventThread shut down
2014-06-06 16:03:03,858 INFO [master:server01:60000] master.HMaster: HMaster main thread exiting
2014-06-06 16:03:03,859 ERROR [main] master.HMasterCommandLine: Master exiting
java.lang.RuntimeException: HMaster Aborted
    at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:192)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:134)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
    at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126)
    at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:2889)
```

Greetings,

Merlijn


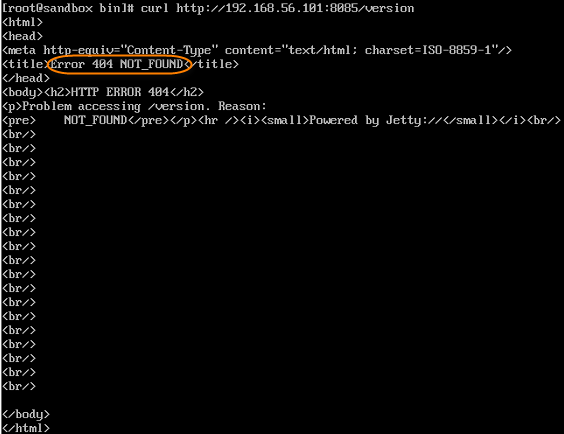

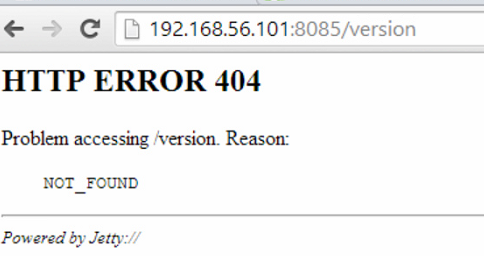

(I’ve highlighted what I think are the pertinent parts)

(I’ve highlighted what I think are the pertinent parts)